preamble

writing a renderer is actually quite difficult, but also educational: the further along you get, the more you learn of the subtleties and difficulties involved. here are some items of knowledge my moderate journey has turned up so far.

architecture

the cornell division is a good one: rendering algorithmics is composed of global light transport, local light interaction, and perceptually informed imaging. it's a simple and almost obvious partitioning, but a good basis.

making a renderer do everything fast and well is fairly difficult; making it do everything reliably and automatically is very difficult.

an iterative and incremental development process is important. a 'spike solution' that will draw some kind of image from an early stage supports motivation, and assists early defect identification.

unit testing is important: pieces must be verified at a simple level because many defects cannot be perceived in the complex mixture of the resulting images. unfortunately the nature of graphics software - complex, numerical - means writing adequate tests can be as difficult as writing implementations, so a certain amount of discipline and patience is required.
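as an illustration of the kind of simple-level test meant here, a minimal sketch (all names hypothetical) that checks a lambertian brdf conserves energy: a monte carlo estimate of the integral of brdf times cosine over the hemisphere should equal the albedo. the fixed seed keeps the test deterministic, and the tolerance is an assumed value chosen well above the expected sampling error.

```python
import math
import random

def lambertian_brdf(albedo):
    """Hypothetical minimal BRDF: constant albedo / pi for every direction pair."""
    return albedo / math.pi

def sample_uniform_hemisphere(rng):
    """Uniform direction on the hemisphere around +z; pdf = 1 / (2 pi)."""
    z = rng.random()                      # cos(theta) is uniform for this distribution
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    return (r * math.cos(phi), r * math.sin(phi), z)

def estimate_reflectance(albedo, n=100_000, seed=1):
    """Monte Carlo estimate of the hemispherical integral of brdf * cos(theta)."""
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(n):
        _, _, cos_theta = sample_uniform_hemisphere(rng)
        total += lambertian_brdf(albedo) * cos_theta / pdf
    return total / n

def test_lambertian_reflectance_equals_albedo():
    # tolerance 0.01 is several standard errors at n = 100000
    for albedo in (0.0, 0.25, 0.8):
        assert abs(estimate_reflectance(albedo) - albedo) < 0.01

if __name__ == "__main__":
    test_lambertian_reflectance_equals_albedo()
    print("ok")
```

a defect such as a missing 1/pi or a wrong pdf would be hard to see in a finished image, but fails this test immediately.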

generally, an xp (extreme programming) or unified process style of development is suitable.

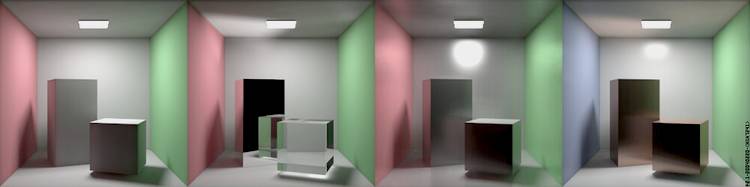

one integration test is to compare an image produced by direct lighting with no photon mapping against one produced by photon mapping of first-hit photons with no direct lighting. the average image luminance of the two should be very close.
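the comparison itself can be sketched as below, assuming both images are available as lists of linear rgb tuples. the rec. 709 luminance weights are standard; the 5% relative tolerance is an assumed value, not one taken from these notes.

```python
def mean_luminance(pixels):
    """Mean Rec. 709 luminance of linear RGB pixels given as (r, g, b) tuples."""
    if not pixels:
        raise ValueError("empty image")
    total = sum(0.2126 * r + 0.7152 * g + 0.0722 * b for r, g, b in pixels)
    return total / len(pixels)

def luminances_agree(direct_only, first_hit_photons, rel_tol=0.05):
    """True if the two renderings have closely similar average luminance.

    direct_only: image from direct lighting, no photon mapping
    first_hit_photons: image from first-hit photons only, no direct lighting
    rel_tol: assumed acceptable relative difference
    """
    a = mean_luminance(direct_only)
    b = mean_luminance(first_hit_photons)
    return abs(a - b) <= rel_tol * max(a, b)
```

a mismatch well beyond the tolerance usually points to a scaling error in photon power or in the density estimate, rather than to noise.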

light transport

photon mapping really is the technique of choice. it's simple, general and effective, better than anything else. for the renderer writer, other approaches to light transport can be easily dismissed: path tracing is too noisy, bidirectional path tracing fails with non-diffuse reflection, radiosity is not general and too limited in various ways, and light fields are far too large. don't bother trying any of them.

a good, general, simple strategy is direct visualisation of the photon map, with separate direct lighting, and special cases for direct lighting through flat glass and for sky and sun illumination.

- good sharp shadows where they're usually produced: by direct lighting

- good soft shadows where they're usually produced: by indirect lighting

- but: it fails to keep sharp shadows produced by direct lighting through glass or a mirror... hence the special case allowing shadow rays to see through flat glass, which covers most of the failures that occur

- the noise in the photon map, which appears as a bumpiness, is quite controllable by adjusting the number of photons in the estimate, so that it will then even out over a small number of frame iterations.

- the weakness is then with thin objects standing away from other things - chair legs, for example. they will tend to be too dark in indirect illumination, because a very high resolution of photons would be needed to put enough onto their surface to make a good estimate.
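the photon map estimate being tuned above can be sketched as follows: radiance at a point is the flux of the k nearest photons, times the brdf, divided by the area of the disc that encloses them. this is a simplified sketch assuming a lambertian surface and scalar photon power; a brute-force nearest search stands in for the kd-tree a real photon map would use, and the photon incoming-direction and surface-normal checks are omitted.

```python
import heapq
import math

def radiance_estimate(photons, x, k, albedo):
    """Diffuse radiance estimate at point x from the k nearest photons.

    photons: list of ((px, py, pz), power) with scalar power
    k: number of photons in the estimate; larger k means less bumpiness, more blur
    """
    if k <= 0 or not photons:
        return 0.0

    def dist2(p):
        return sum((a - b) ** 2 for a, b in zip(p, x))

    nearest = heapq.nsmallest(k, photons, key=lambda ph: dist2(ph[0]))
    r2 = dist2(nearest[-1][0])           # squared radius enclosing the k photons
    if r2 == 0.0:
        return 0.0                       # degenerate: all photons sit exactly at x
    flux = sum(power for _, power in nearest)
    brdf = albedo / math.pi              # Lambertian surface assumed
    return brdf * flux / (math.pi * r2)
```

the thin-object weakness is visible in this form: on a chair leg few photons land nearby, so the enclosing radius grows to take in surfaces that are not part of the leg at all, or the estimate rests on very few photons.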

a more complex strategy is something like the full jensen one: a ray traced gather to indirectly visualise the photon map and emitters, with the sampling distribution informed by local photon map values and the brdf

- cures the thin objects weakness

- maybe better handling of direct illumination

- maybe more robust and consistently good handling of general peaks in inward illumination

- but: more code, more computation

- uncertain that the benefits outweigh the extra work
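a much simplified sketch of the gather step, for a lambertian point only. it is a stand-in for the full jensen scheme, not a reproduction of it: the sampling distribution here is plain cosine-weighted rather than informed by the photon map, and `incoming_radiance` is a hypothetical callback that would trace a ray and return the photon map estimate (or emission) where it lands.

```python
import math
import random

def cosine_sample_hemisphere(rng):
    """Direction around +z with pdf cos(theta) / pi (Malley's method)."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u1)))

def final_gather(incoming_radiance, albedo, n, rng):
    """Reflected radiance at a Lambertian point, gathered with n rays.

    With pdf = cos / pi and brdf = albedo / pi, each sample's weight
    brdf * cos / pdf reduces to the albedo, so the result is
    albedo times the mean incoming radiance.
    """
    total = 0.0
    for _ in range(n):
        total += incoming_radiance(cosine_sample_hemisphere(rng))
    return albedo * total / n
```

the extra computation mentioned above comes from n being typically hundreds of rays per gather point, each needing its own photon map lookup.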

the main problem in automation is controlling photon distribution and storage in a view-oriented way.

light interaction

the axis of rendering is the scattering event, the local light interaction: the gathering of incoming light, and the calculation of outgoing light, at a point on a surface. everything is oriented towards doing a good job of this.

perfect diffuse and perfect specular surfaces are easy to render; it's the moderately glossy surfaces that are difficult. unfortunately, many artificial objects fall into this intermediate category.

it's difficult to take a general brdf as a black box and render it effectively and efficiently. splitting brdfs into parts that can each give a measure of their pointiness helps a lot. since the available brdf models are really quite primitive, this isn't a restrictive or onerous requirement.
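one possible shape for such a split brdf, as a hypothetical sketch: each lobe carries its share of reflectance and a pointiness in [0, 1], where 0 is perfect diffuse and 1 a perfect specular spike. the mapping from a phong exponent to pointiness, and its `half_point` constant, are assumptions made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Lobe:
    """One part of a split BRDF (hypothetical structure)."""
    name: str
    reflectance: float   # fraction of incoming energy this lobe reflects
    pointiness: float    # 0 = perfect diffuse, 1 = perfect specular spike

def phong_pointiness(exponent, half_point=100.0):
    """Map a Phong exponent onto [0, 1); half_point is an assumed tuning constant."""
    return exponent / (exponent + half_point)

def make_plastic(diffuse, specular, exponent):
    """A diffuse lobe plus an optional glossy Phong lobe."""
    lobes = [Lobe("diffuse", diffuse, 0.0)]
    if specular > 0.0:
        lobes.append(Lobe("glossy", specular, phong_pointiness(exponent)))
    return lobes

def overall_pointiness(lobes):
    """Reflectance-weighted mean pointiness of a split BRDF."""
    total = sum(lobe.reflectance for lobe in lobes)
    if total == 0.0:
        return 0.0
    return sum(lobe.reflectance * lobe.pointiness for lobe in lobes) / total
```

keeping the lobes separate also allows choosing a lobe first, in proportion to its reflectance, and then using that lobe's own pointiness, instead of one blended value.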

local interaction is well handled by stochastically choosing, depending on the pointiness of the brdf, either photon map estimation or ray propagation. it's important to apply a 'gain'-like distortion to the pointiness: if it is near the ends of its range (say within 10%), it should be pushed all the way out. this eliminates isolated sampling effects (usually bright spots) that occur too infrequently to be averaged out well. then, if ray propagation is chosen and the brdf isn't a perfect spike or extremely pointy, emission is not included from the surface hit; instead, direct lighting is evaluated separately through the brdf. this only performs poorly for emitters occupying a large solid angle as seen from the surface point, which is a very unusual case. (separating emitters is how ward does interaction in 'radiance'.)
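the distortion and the stochastic choice can be sketched as below. the 10% margin is the figure suggested above; the function names and the string results are hypothetical. assuming each branch estimates the whole outgoing radiance on its own, the chosen result is used as-is rather than reweighted by the selection probability.

```python
import random

def push_to_ends(pointiness, margin=0.1):
    """Gain-like distortion: values within `margin` of 0 or 1 snap to the end."""
    if pointiness <= margin:
        return 0.0
    if pointiness >= 1.0 - margin:
        return 1.0
    return pointiness

def choose_interaction(pointiness, rng):
    """Pick the estimator for one scattering event.

    Returns "ray" with probability equal to the distorted pointiness,
    otherwise "photon_map". After the distortion, nearly diffuse surfaces
    never fire rare rays, and nearly specular ones never use the photon map.
    """
    p = push_to_ends(pointiness)
    return "ray" if rng.random() < p else "photon_map"
```

with pointiness 0.05 the ray branch is now impossible, so the occasional ray that would have hit an emitter and produced an isolated bright spot never happens.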

a remaining problem is glossy interaction from concentrated bright areas (e.g. a surface close to an emitter, or a sharply specular reflection of an emitter), which is intractably noisy. none of the 'special arrangements' (photon mapping, eye ray propagation, separate direct lighting) takes care of this case. but i think i have a good idea...

the optimal monte carlo sampling distribution is not importance (putting more samples where the function is larger), but putting more samples where the function is changing most. so: for the probability density, use the importance scaled by the magnitude of its derivative plus 1, and divide the sample values by that same derivative-plus-1 factor. perfect specular would still be a spike, and perfect diffuse still constant. the brdf importance can be augmented with estimates of incoming light from the photon map. these distributions would have to be built numerically, which requires significantly more work, in code and in execution, than simple importance sampling. whether it's worth it is untested.
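a one-dimensional numerical sketch of the idea, under stated assumptions: the function is tabulated on a grid, each cell gets a weight proportional to f(1 + |f'|) with the derivative taken by finite differences, and samples are drawn from the resulting piecewise-constant density. dividing each sample by the density keeps the estimator unbiased wherever f is non-negative and every cell with f > 0 has a nonzero weight. the photon map augmentation and the extension to directions are not shown, and, as noted above, whether this beats plain importance sampling is untested.

```python
import bisect
import random

def build_table(f, a, b, n=256):
    """Piecewise-constant pdf proportional to f * (1 + |f'|) on [a, b] (n >= 2)."""
    h = (b - a) / n
    xs = [a + (i + 0.5) * h for i in range(n)]        # cell centres
    fs = [f(x) for x in xs]
    weights = []
    for i in range(n):
        # central difference, one-sided at the two ends
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        deriv = (fs[hi] - fs[lo]) / ((hi - lo) * h)
        weights.append(fs[i] * (1.0 + abs(deriv)))
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("function must be positive somewhere on [a, b]")
    cdf, acc = [], 0.0
    for w in weights:
        acc += w / total
        cdf.append(acc)
    pdf = [w / (total * h) for w in weights]          # density inside each cell
    return cdf, pdf, h

def estimate_integral(f, a, b, samples, rng, n=256):
    """Monte Carlo integral of f over [a, b] using the derivative-scaled pdf."""
    cdf, pdf, h = build_table(f, a, b, n)
    total = 0.0
    for _ in range(samples):
        # bisect_right never selects a zero-weight cell; clamp guards rounding
        i = min(bisect.bisect_right(cdf, rng.random()), n - 1)
        x = a + (i + rng.random()) * h                # uniform within the cell
        total += f(x) / pdf[i]
    return total / samples
```

for example, `estimate_integral(lambda x: x * x, 0.0, 1.0, 20000, random.Random(0))` should come out close to 1/3.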

despite its separateness in mathematical expressions of interaction, the cosine term must be incorporated into the brdf concept, since it doesn't apply in the perfect specular case.
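one way to see the point in symbols, using the standard reflection integral. the names ρ (reflectance) and R(ω_o) (mirror direction of ω_o) are introduced here for illustration:

```latex
L_o(\omega_o) = \int_{\Omega} f_r(\omega_i, \omega_o)\, L_i(\omega_i) \cos\theta_i \, d\omega_i

% lambertian surface: the cosine stays inside the integral
f_r = \frac{\rho}{\pi}
\quad\Rightarrow\quad
L_o(\omega_o) = \frac{\rho}{\pi} \int_{\Omega} L_i(\omega_i) \cos\theta_i \, d\omega_i

% perfect mirror: the delta has to carry 1/\cos\theta_i so that the cosine cancels
f_r = \rho\, \frac{\delta(\omega_i - R(\omega_o))}{\cos\theta_i}
\quad\Rightarrow\quad
L_o(\omega_o) = \rho\, L_i(R(\omega_o))
```

if the unit of the brdf concept is instead the product f_r cos θ_i, the diffuse case becomes ρ cos θ_i / π and the mirror case simply ρ δ(ω_i - R(ω_o)), with no division to cancel.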

the obvious representation of the brdf for a surface point as a software class is deficient. the rendering strategy will be built from a variety of brdf uses, which correspond more closely to the various rendering equations. in class-oriented form these are broken across different parts of the code, making them harder to grasp. some attention is required to devise a more appropriate pattern.

imaging

whether or not ward mentions it in the tonemapping graphics gem, it's important to also include a gamma transform of between 1/2 and 1/3 after the scaling, to simulate perceptual response. otherwise images look much too contrasty: shadows are too dark and indirect illumination is almost invisible. poynton suggests using 1/2.2 for want of anything better.
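a sketch of scaling followed by gamma, assuming ward's contrast-based scale factor in the form it is commonly cited, m = [(1.219 + (L_dmax/2)^0.4) / (1.219 + L_wa^0.4)]^2.5, with luminances in cd/m². the maximum display luminance of 100 cd/m², the log-average as the world adaptation luminance, and the small offset inside the logarithm are all assumptions of this sketch.

```python
import math

def ward_scale_factor(world_adaptation, display_max=100.0):
    """Ward's contrast-based scale factor (luminances in cd/m^2)."""
    num = 1.219 + (display_max / 2.0) ** 0.4
    den = 1.219 + world_adaptation ** 0.4
    return (num / den) ** 2.5

def tone_map(luminances, gamma=1.0 / 2.2, display_max=100.0):
    """Scale world luminances, normalise to [0, 1], then apply the gamma transform."""
    if not luminances:
        return []
    # log-average as adaptation luminance; the small offset avoids log(0)
    offset = 1e-4
    log_avg = math.exp(sum(math.log(offset + l) for l in luminances) / len(luminances))
    m = ward_scale_factor(log_avg, display_max)
    out = []
    for l in luminances:
        d = min(1.0, m * l / display_max)   # display value, clipped to the range
        out.append(d ** gamma)              # perceptual gamma, applied after scaling
    return out
```

with gamma=1.0 the output is the linear scaled result, which is a quick way to see the overly contrasty images described above.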

the best output image format is png. it allows all common pixel depths and alpha, it permits color space and gamma data, it's losslessly compressed, it's a universally supported w3c standard, and there's a solid library available to reuse. (it's even possible to abuse it a bit to store ward 'real pixels' (rgbe) images.)
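to show where the gamma data lives, a minimal 8-bit rgb png encoder using only the python standard library. this is a sketch in place of the library mentioned above, not a replacement for it: no filtering beyond type 0, no alpha, no interlacing. the gAMA chunk stores the encoding gamma times 100000 and must come before the image data.

```python
import struct
import zlib

def _chunk(kind, data):
    """One PNG chunk: length, type, data, then a CRC over type + data."""
    return (struct.pack(">I", len(data)) + kind + data
            + struct.pack(">I", zlib.crc32(kind + data) & 0xFFFFFFFF))

def encode_png(width, height, rgb_rows, gamma=1.0 / 2.2):
    """Encode rows of (r, g, b) ints in 0..255 as PNG bytes with a gAMA chunk."""
    # width, height, bit depth 8, colour type 2 (truecolour), default methods
    header = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    raw = bytearray()
    for row in rgb_rows:
        raw.append(0)                      # filter type 0 (none) per scanline
        for r, g, b in row:
            raw.extend((r, g, b))
    return (b"\x89PNG\r\n\x1a\n"
            + _chunk(b"IHDR", header)
            + _chunk(b"gAMA", struct.pack(">I", round(gamma * 100000)))
            + _chunk(b"IDAT", zlib.compress(bytes(raw)))
            + _chunk(b"IEND", b""))
```

usage: `open("out.png", "wb").write(encode_png(2, 1, [[(255, 0, 0), (0, 255, 0)]]))` writes a two-pixel image tagged with gamma 1/2.2.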

other

frame-coherence of stochastic sampling is preferable. that is: in the eye path tracing phase, every random number used in making a pixel is shared by all pixels in that frame. although it isn't such a 'good' sampling, aesthetically it's much better, because noise is eliminated entirely from most of the image.
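a minimal sketch of frame-coherent sampling: one list of random numbers is drawn per frame, from a seed derived from the frame index, and handed unchanged to every pixel. `shade` is a hypothetical per-pixel function that consumes the list in a fixed order. within a frame, smooth surfaces then receive the same sampling error everywhere and look smooth; the error changes from frame to frame and averages out over frame iterations.

```python
import random

def render_frame(width, height, frame_index, randoms_per_pixel, shade):
    """Render one frame with frame-coherent stochastic sampling.

    shade(x, y, randoms) must consume `randoms` in a fixed order, so that
    the n-th random number plays the same role at every pixel.
    """
    rng = random.Random(frame_index)          # one seed per frame, not per pixel
    randoms = [rng.random() for _ in range(randoms_per_pixel)]
    return [[shade(x, y, randoms) for x in range(width)] for y in range(height)]
```

the design choice is the seeding point: moving the `random.Random` call inside the pixel loop would give ordinary independent per-pixel noise.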

quasi-random sequences are significantly superior to general pseudo-random sequences: they are less noisy and give a smoother result faster. aliasing doesn't appear to be a problem. a lot of them are needed, though: about 100 ideally. that many probably won't be available, so some careful rationing will be required. also, particular pairs of sequences (which is the way many will be used) produce very unsatisfactorily regular patterns, so every pair must be tested by plotting.
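as one concrete family, a sketch of the halton sequence (the notes don't say which quasi-random sequences were used, so this choice is an assumption): each dimension is the radical inverse in a different prime base, so 100 dimensions need the first 100 primes. it also shows one source of the regular patterns: for an index below the base, the radical inverse is just index / base, so the first points of two nearby large bases lie on a straight line.

```python
def radical_inverse(index, base):
    """Van der Corput radical inverse of a non-negative integer in `base`."""
    result, frac = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * frac            # mirror the digits about the radix point
        frac /= base
    return result

def first_primes(count):
    """The first `count` primes by trial division (fine for ~100 bases)."""
    primes, n = [], 2
    while len(primes) < count:
        if all(n % p for p in primes if p * p <= n):
            primes.append(n)
        n += 1
    return primes

def halton_point(index, dims):
    """Point `index` of a Halton sequence in `dims` dimensions, one prime base each."""
    return [radical_inverse(index, b) for b in first_primes(dims)]

# a badly behaved pair: the first 16 points in bases 17 and 19 all fall on
# the line y = (17 / 19) x, which a scatter plot shows immediately
pairs_17_19 = [(radical_inverse(i, 17), radical_inverse(i, 19)) for i in range(1, 17)]
```

plotting each pair is still the most direct test; the straight-line check is just a quick numeric complement for the most obvious case.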